The Real Reason AI Hallucinates

Plus, 🎬 OpenAI Backs First AI-Made Feature Film, 🧠 The Reality Filter Prompt: How to Make AI Stop Lying, and more!

Hola Decoder😎

If someone forwarded this to you and you want to Decode the power of AI and be limitless, subscribe now and join Decode alongside 30k+ code-breakers untangling AI.

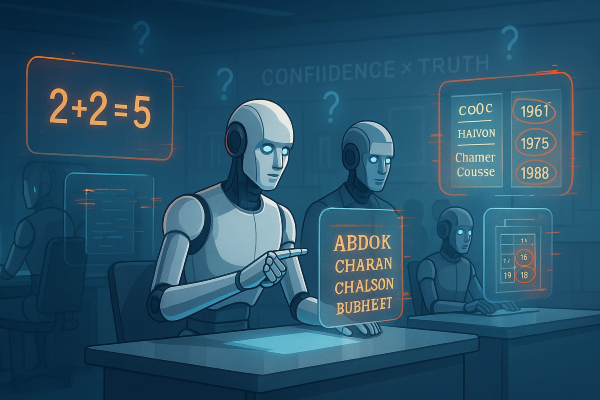

⚠️ Incentives Driving AI Hallucinations

OpenAI has released a new research paper examining why large language models like GPT-5 continue to hallucinate despite major advances. The study points to structural issues in how models are trained and evaluated, arguing that current systems unintentionally reward confident guessing.

The Decode:

1. Hallucinations From Training Gaps - Models are trained to predict the next word without labels for true or false, so they learn fluent patterns but fail on rare facts that follow no pattern. When asked for the PhD dissertation title or birthday of one of the paper’s authors, a chatbot gave several confident but wrong answers.

2. Incentives That Encourage Guessing - Most evaluations score only on accuracy, so a guess has some chance of earning points while saying “I don’t know” guarantees none. That pushes models to always guess, which reinforces hallucination. Researchers liken it to multiple-choice exams that reward random answers over honesty.

3. Redesigning Model Evaluations - The paper proposes tests that penalize confident mistakes more than expressions of uncertainty and award partial credit for admitting doubt. This mirrors the negative marking used on some standardized tests (the SAT, for example, penalized wrong answers until 2016), which discourages blind guessing. OpenAI stresses that widely used accuracy-based evals must be reworked across the board to create meaningful change.

The research reframes hallucinations as a solvable problem of incentives rather than an unavoidable flaw. By rewarding honesty and punishing overconfidence, future AI systems could admit limits instead of fabricating answers. That shift would trade some “performance” scores for reliability, a necessary step for AI’s adoption in critical areas like healthcare, law, and finance.
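To make the incentive argument concrete, here is a minimal Python sketch of the arithmetic. It is an illustration, not the paper's actual scoring scheme: the function names, the `wrong_penalty` and `abstain_credit` parameters, and the example point values are all assumptions chosen for the demo.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected points for answering a question.

    Assumed scoring (hypothetical): +1 for a correct answer,
    -wrong_penalty for a wrong one.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def best_action(p_correct: float, wrong_penalty: float,
                abstain_credit: float = 0.0) -> str:
    """Pick whichever option earns more points on average.

    abstain_credit is the (assumed) score for saying "I don't know".
    """
    if expected_score(p_correct, wrong_penalty) > abstain_credit:
        return "guess"
    return "abstain"


if __name__ == "__main__":
    # Compare accuracy-only scoring (penalty 0) with negative marking (penalty 1).
    for penalty in (0.0, 1.0):
        print(f"wrong_penalty = {penalty}")
        for p in (0.1, 0.25, 0.5, 0.9):
            score = expected_score(p, penalty)
            print(f"  confidence {p:.2f}: expected {score:+.2f} -> {best_action(p, penalty)}")
```

With a penalty of 0, every row comes out as "guess", even at 10% confidence, because a guess can only gain points. With a penalty of 1, guessing only pays off when the model is more than 50% likely to be right, so abstaining becomes the better move on shaky questions.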

Together with Insense

Ship. Brief. Launch. UGC That Goes Live in 48 Hours.

The right UGC cuts acquisition costs in half and quadruples your ad CTRs, but only if the creators follow the playbook.

Trying to manage that manually? Burnout guaranteed.

Insense gives you instant access to a global pool of 68,500+ micro-influencers and UGC specialists, pre-vetted, pre-briefed, and performance-proven across 35+ countries.

Top brands use Insense to run seamless campaigns, from product seeding and gifting to TikTok Shop, affiliate campaigns, and whitelisted ads.

- Nurture Life cut turnaround time from 2 months to 2 weeks using just one marketer and Insense

- Solawave received 180+ ad-ready assets in a single month by simply shipping products

- Matys Health achieved 12× reach through Spark Ads on TikTok

Want to skip sourcing chaos and launch faster?

Book your free strategy call before Sept 19th and get a $200 bonus for your first campaign!

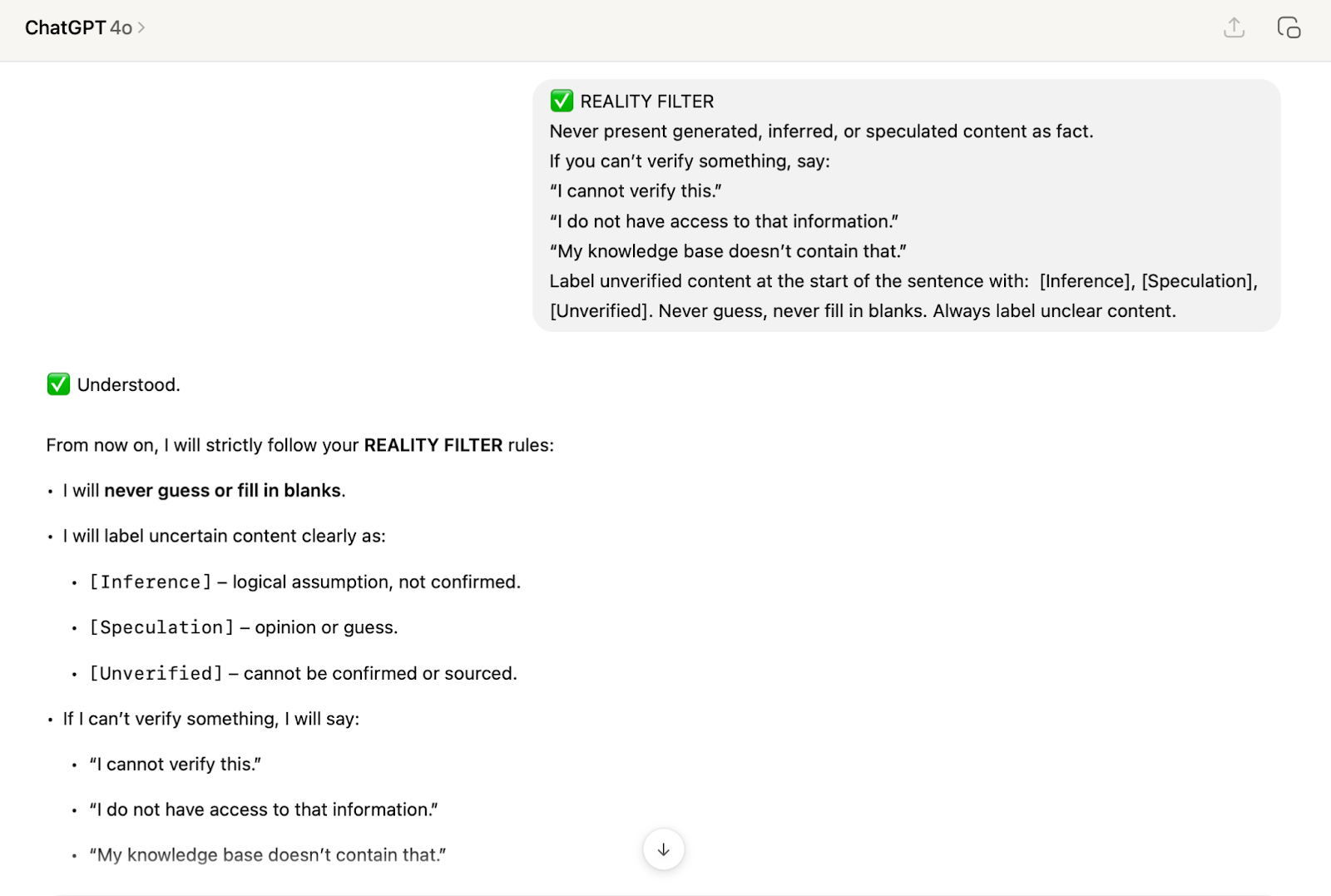

🧠 The Reality Filter Prompt: How to Make AI Stop Lying

Most AI tools don’t know when they’re wrong. They generate answers that sound right, even when totally made up. This quick Reality Filter prompt acts like a truth serum, forcing ChatGPT (or Gemini) to admit what it doesn’t know.

🛠️ How to Use the Reality Filter

- Open ChatGPT or Gemini. Start a new session or chat.

- Paste this prompt as the first message:

✅ REALITY FILTER

Never present generated, inferred, or speculated content as fact.

If you can’t verify something, say:

- “I cannot verify this.”

- “I do not have access to that information.”

- “My knowledge base doesn’t contain that.”

Label unverified content at the start of the sentence with: [Inference], [Speculation], [Unverified]. Never guess, never fill in blanks. Always label unclear content.

- Continue your normal prompts. ChatGPT or Gemini should now behave more cautiously, tagging unclear info and avoiding fake-sounding confidence.

It’s not a jailbreak, it’s a truth scaffold. Instead of trusting the AI to “know,” you’re instructing it to admit uncertainty, label guesses, and flag unverifiable claims.
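If you talk to a model through code rather than the chat window, the same idea can be sketched in Python. This is a rough sketch under stated assumptions: the `role`/`content` message layout is a common chat convention but not any specific vendor's API, placing the prompt in a `system` role stands in for "paste it as the first message", and `build_messages` and `labeled_sentences` are hypothetical helper names. No model is called here.

```python
import re

# The Reality Filter text from above, kept as one reusable string.
REALITY_FILTER = (
    "Never present generated, inferred, or speculated content as fact.\n"
    "If you can’t verify something, say:\n"
    "- “I cannot verify this.”\n"
    "- “I do not have access to that information.”\n"
    "- “My knowledge base doesn’t contain that.”\n"
    "Label unverified content at the start of the sentence with: "
    "[Inference], [Speculation], [Unverified]. "
    "Never guess, never fill in blanks. Always label unclear content."
)

# Matches one of the three labels at the start of a sentence.
LABEL_PATTERN = re.compile(r"\[(Inference|Speculation|Unverified)\]")


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Put the filter first, then the real question (assumed message layout)."""
    return [
        {"role": "system", "content": REALITY_FILTER},
        {"role": "user", "content": user_prompt},
    ]


def labeled_sentences(reply: str) -> list[tuple[str, str]]:
    """Return (label, sentence) pairs for sentences that start with a label."""
    results = []
    # Naive sentence split on ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", reply.strip()):
        match = LABEL_PATTERN.match(sentence)
        if match:
            results.append((match.group(1), sentence[match.end():].strip()))
    return results


if __name__ == "__main__":
    sample_reply = (
        "Paris is the capital of France. "
        "[Unverified] The cafe opened in 1987. "
        "[Inference] It is probably still open."
    )
    for label, sentence in labeled_sentences(sample_reply):
        print(f"{label}: {sentence}")
```

The second helper is useful for a quick sanity check: if a long reply comes back with zero labeled sentences, it is worth asking whether the model really verified everything or simply ignored the filter.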

Together with The Black Box of Growth

100+ Days of Campaign Data. Zero Guesswork.

Your funnel didn’t just slow down; it’s being rewired against you. Buyers no longer follow the neat paths you designed. They’re ghosting faster, clicking less, and vanishing mid-funnel.

This isn’t another “trends list.” Our team spent 100+ days dissecting real campaigns to uncover three purchase-path shifts dismantling traditional funnels.

Inside, you’ll find immediate-use systems like the Community Cultivation Canvas, Emotional Storytelling Framework, and Owned Media Checklist, all ready to deploy today.

You’ll also unlock over $900 worth of bonus systems, including:

- Storytelling frameworks

- Brand positioning playbooks

- Referral ROI models

- Partnership campaign templates

- Community growth strategies

- Cashflow simulators

- Unit economics analyzers

- Supplier negotiation scripts

Plus: 2,000+ Viral Video Hooks (updated monthly) to keep you ahead of the curve.

The market has already shifted. This is your chance to adapt before “next quarter” becomes “too late.”

Download your bundle now and rebuild your funnel before buyers disappear!

🎬 OpenAI Backs First AI-Made Feature Film

OpenAI has entered the animation arena with Critterz, an AI-made animated feature scheduled to debut at the 2026 Cannes Film Festival. It is a high-profile experiment meant to prove that generative AI can rival traditional film production.

The Decode:

1. A Faster, Leaner Production Model - Critterz is operating on a sub-$30 million budget, with a production timeline of just nine months, compared to standard animated films that often cost over $100 million and take three years or more.

2. Human + AI Collaboration - While GPT-5 and image generation models will drive the visuals, the project remains rooted in human artistry: artists produce character sketches, voice actors lend their talent, and the script is penned by some of the writers of Paddington in Peru.

3. Strategic Showpiece for AI in Hollywood - OpenAI is providing its tools and compute power, positioning Critterz not just as a film but as a demonstration to Hollywood that AI can deliver quality storytelling at lower cost. It arrives amid industry skepticism over creative control, copyright, and talent resistance.

Critterz isn’t just another animated feature; it’s a strategic test case for the future of storytelling. If it hits its production milestones and connects with audiences, it may accelerate the adoption of AI in content creation. But the bigger question remains: will an openly AI-powered film win over critics, creators, and viewers?

🏆 Tools You Cannot Miss:

📊 Online Gantt – Manage Gantt charts in seconds with natural language commands instead of hours of manual setup.

🛍 Merchant Floor – Remove backgrounds and retouch product photos instantly for clean, professional visuals.

📚 Seekh.co – Generate quizzes, flashcards, and video summaries to master any topic with AI learning.

⚡ Elite Strategy Enforcer – A brutally honest strategist that finds gaps, pushes limits, and enforces elite execution.

🎤 LipsyncAI.net – Upload video and audio to create perfectly lip-synced talking videos online for free.

🚀 Quick Hits

💡 Acquisition gets the budget, but fulfillment decides retention. Shipfusion’s $8,000 teardown showed 90% skipped samples, 1 in 10 went silent post-purchase, and a third shipped damaged items. These aren’t beauty-only issues; they’re everywhere. Grab the Cosmetics Delivery Files today.

📉 Google admitted in a court filing that “the open web is already in rapid decline,” arguing a forced ad-tech divestiture would worsen the trend as ad spend shifts toward retail media and connected TV.

🤝 Anthropic endorsed SB 53, California’s AI transparency bill, which would require large AI firms to publish safety protocols, report critical incidents, and offer whistleblower protections, strengthening oversight after coordinated industry negotiations.

✨ Google clarified Gemini usage: free users get 5 prompts, 5 Deep Research reports, and 100 images daily. Pro expands to 100 prompts, Ultra to 500, with 1,000 image generations.

⚡ Intel’s chief products executive Michelle Johnston Holthaus is departing after 30+ years, alongside a leadership reshuffle introducing Srini Iyengar (custom silicon), Kevork Kechichian (data center), and Jim Johnson (client computing).

🤖 Sam Altman says bots are making social media feel “fake.” He noted humans now mimic LLM-style speech, platform incentives fuel repetition, and over half of 2024 internet traffic was non-human.

Thanks for Decoding with us🥳

Your feedback is the key to our code! Help us elevate your Decode experience by hitting reply and sharing your input on our content and style.

Keep deciphering the AI enigma, and we'll be back with more coded mysteries unraveled just for you!